Apple Inc. builds great computers. Well, at least the hordes of slave workers over in Asia do. Kudos to them for building with astonishing precision, and to the design team for creating beautiful machines that feel great to work with. Shame on this $500bn+ company for taking advantage of such cheap labour; spending 0.5% of its margin on working conditions would give hundreds of thousands of families much better prospects and fair work. But let’s put that aside for a moment.

A Mac can easily be sold for over 60% of its initial price two years from now. Try this with an Asus, Sony, Acer, HP, what have you. Almost all other laptops I’ve seen so far - since IBM dumped the ThinkPad - look like a piece of junk after two years of transportation and serious usage (like 8-12 hours a day).

Now this is where the issue starts: Macs are re-sold regularly and people are trying to get their hands on a pre-owned device to cut some of the initial cost. On the other hand, Apple has built the perfect golden cage for its users. Services, hardware, software, complementary products - all very well integrated and made to work with each other quite smoothly (well, most times at least…). When buying a Mac, one does not just buy a computer but enters a realm of multiple services spun around the user’s “digital lifestyle” that try to keep the user on the chosen platform. While hardware may, and is meant to, become obsolete, these services stay and are assigned to a user (via their Apple-ID) rather than to a specific piece of hardware. Obviously Apple is very successful in tying users to proprietary services so they won’t flee back to the good ol’ Windows or Android world.

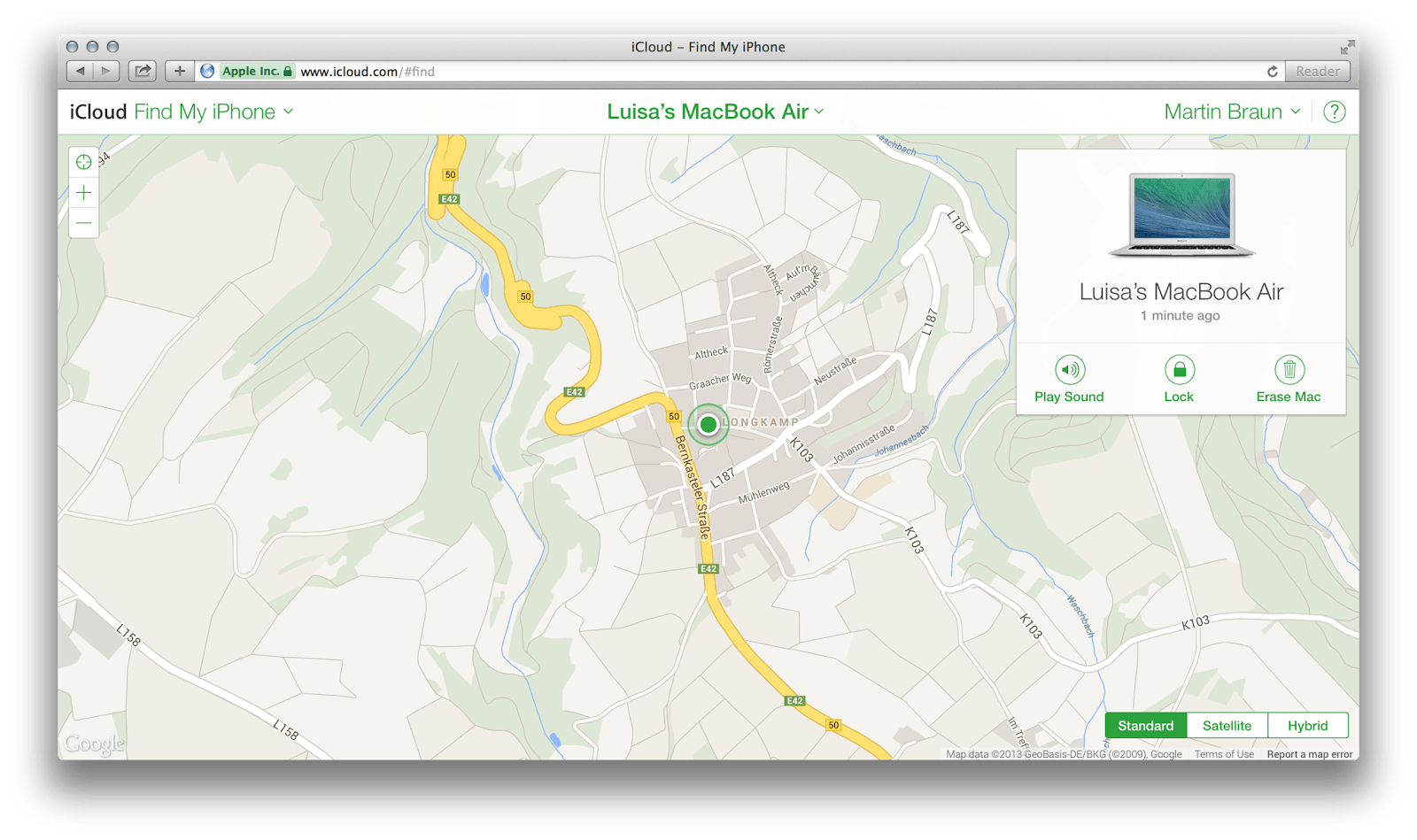

One service that is tightly integrated with many Apple devices is “Find My iPhone/Mac” (FMI from now on). In a nutshell, it geo-locates devices that are registered with the user’s Apple-ID in case a device gets lost. This works for phones and tablets using GPS tracking as well as for laptops, which use wireless network information for an approximate location. The service becomes very valuable over time, and more than once it has given me a good idea of where I’d left my phone. Besides locating a device on a map, it offers basic remote management features: making the device play a sound, locking it to avoid misuse and wiping its data in case it may have been compromised.

Now, what’s the catch? Well, back in 2011 I bought a MacBook Air and used it for almost two years before selling it online. While using it, I had FMI activated and the device was assigned to my Apple-ID. I had also enabled FileVault, an OS X feature that encrypts the whole computer storage, which by the way everybody should use. Before the sale I additionally wiped all of that storage to hand over a plain computer to the new owner. There is close to no chance that anybody outside of an intelligence agency or Apple could access my old (account) data. I sold it to one of these fixed-price dealers where you neither get to know the buyer nor care about what happens to the machine.

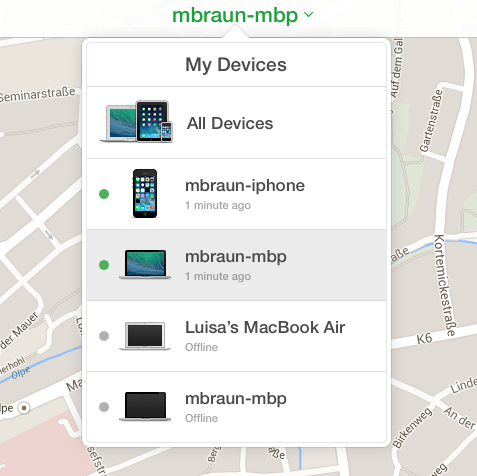

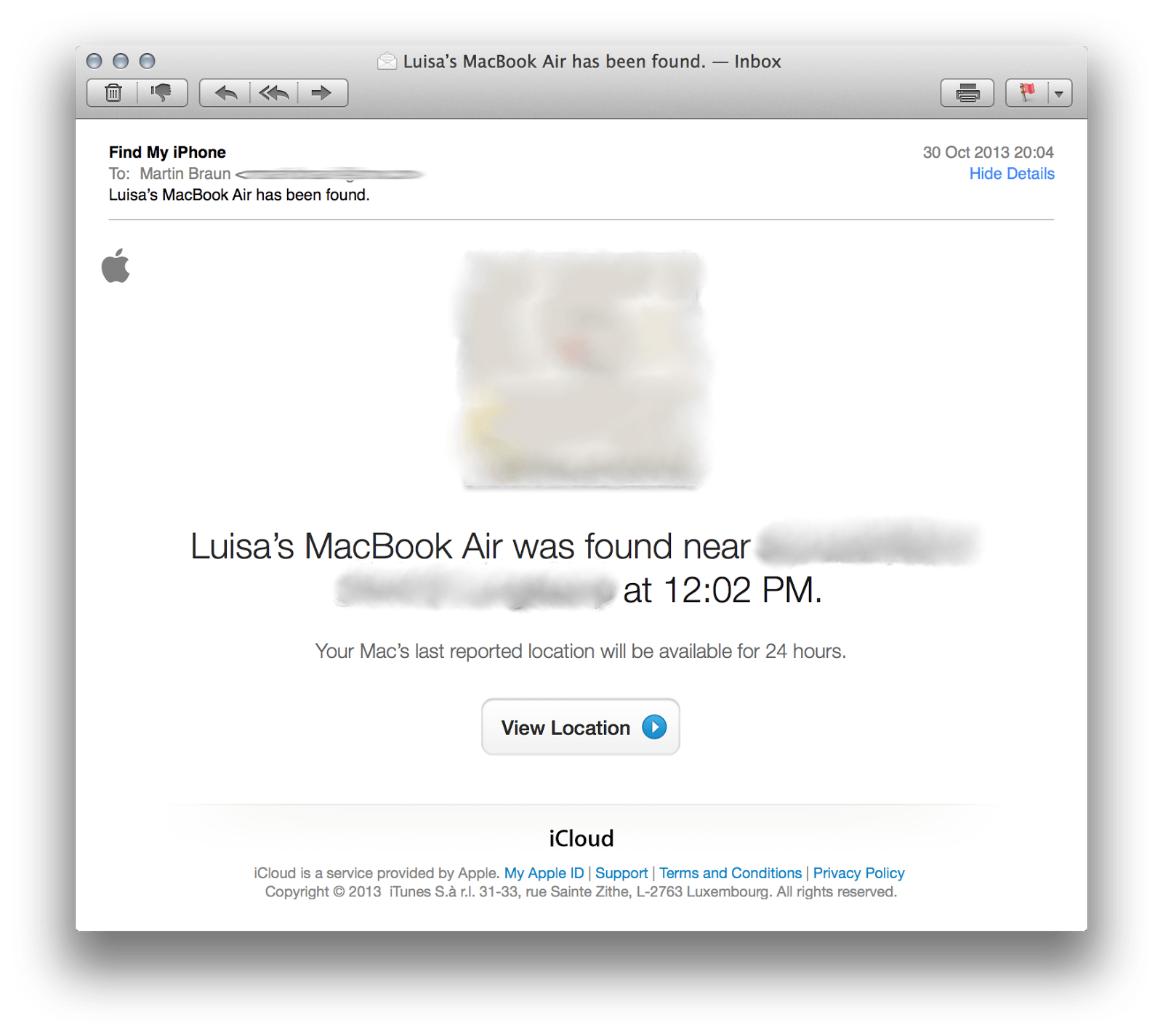

Roughly half a year after I sold that machine, I happened to check FMI to see if there had been updates to the service. To my astonishment, a machine called “Luisa’s Mac Book Air” showed up in my list of machines.

It was not online at the time I checked, so I opted for “Notify me when found”. Shortly after, I received an e-mail: my old MacBook Air had been found. Yay! Interestingly enough, it was still linked to my Apple-ID, and all the location and remote management features were still in place. Based on the location information and her name, which had been used to generate the computer name, I was able to look up Luisa on various online services. She’s a student, and based on the travel pattern of her (my old) laptop it should be easy to find out where she lives, whom she meets and what courses she takes… Well, I’m not interested in stalking people, so I only gathered enough information to verify that this is real. Given a bit of criminal energy, I could lock her machine and blackmail her into paying to regain access to her data. Not interested in this either, just sayin’.

There are stories of guys who recovered their stolen machines by hacking into them remotely, tracking them, identifying the thief and all that stuff. Apple, pursuing the idea of “everything simple”, has just taken it a step further. In a nutshell, I got privileged remote access to a machine that had been wiped clean and re-installed, and on which I had neither a user account nor any information about its users. Well, that’s one hell of a privilege escalation. Luisa for sure is not using my Apple-ID and has no clue that she can still be tracked.

There are various scenarios for what might have happened. Either she uses FMI and all that iCloud stuff, which would point to a permission issue within iCloud when linking machines to accounts. Or she does not use FMI, which is worse, since it implies this service is available regardless of the choice the user made when setting up the machine. In both cases the new owner of the machine does not seem to have any possibility to opt out of being tracked by the previous owner.

Digging deeper, I started to suspect that Apple is not really using the owner’s user or account credentials to get access to the machine. While this may be a logical implementation to track/manage machines where the user is not logged in, it’s quite critical in terms of privacy. A user may choose a strong password and connect the machine to their Apple-ID - but in the end the machine is just identified and accessed by some kind of unique hardware identifier. At least the FMI web app uses such (hashed) identifiers. It’s a good guess that these IDs are used to bypass authentication on her laptop. If so, it is plausible that Apple has remote access to the location data of all devices as well as powerful remote management capabilities (wipe, lock…). At the very least, remote access to the machine is not secured by the credentials the user has chosen for their Apple-ID but rather by a static ID and a vague trust relationship with Apple. Authentication by shared secrets is an issue in itself; however, it’s still better than apparently having no secret in the authentication process at all.
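To make the difference concrete, here is a minimal toy sketch in Python. It is emphatically not Apple’s implementation, which I don’t know; every name in it (`Device`, `TrackingService`, `device_handle`, `enrollment_secret`, the serial number) is made up for illustration. It compares a service that trusts a hashed static hardware ID alone with one that additionally requires the device to prove it still holds a secret created at enrollment, a secret that a wipe destroys.

```python
import hashlib
import hmac
import secrets


def device_handle(hardware_id: str) -> str:
    """Hash a static hardware identifier into a stable handle (hypothetical)."""
    return hashlib.sha256(hardware_id.encode()).hexdigest()


class Device:
    """Toy device: the hardware ID survives a wipe, the enrollment secret does not."""

    def __init__(self, hardware_id: str):
        self.hardware_id = hardware_id
        self.enrollment_secret = None

    def wipe(self):
        # Wiping the storage destroys everything except the hardware ID.
        self.enrollment_secret = None

    def prove(self, challenge: bytes):
        # Answer a challenge with an HMAC, if a secret is still present.
        if self.enrollment_secret is None:
            return None
        return hmac.new(self.enrollment_secret, challenge, hashlib.sha256).digest()


class TrackingService:
    """Toy service; require_secret switches between the two designs."""

    def __init__(self, require_secret: bool):
        self.require_secret = require_secret
        self.bindings = {}  # handle -> (account, secret)

    def enroll(self, account: str, device: Device):
        secret = secrets.token_bytes(32)
        device.enrollment_secret = secret
        self.bindings[device_handle(device.hardware_id)] = (account, secret)

    def can_track(self, account: str, device: Device) -> bool:
        binding = self.bindings.get(device_handle(device.hardware_id))
        if binding is None or binding[0] != account:
            return False
        if not self.require_secret:
            return True  # the static ID alone is enough
        challenge = secrets.token_bytes(16)
        proof = device.prove(challenge)
        expected = hmac.new(binding[1], challenge, hashlib.sha256).digest()
        return proof is not None and hmac.compare_digest(proof, expected)


def demo():
    """Return (trackable before wipe, trackable after wipe) for each design."""
    results = {}
    for require_secret in (False, True):
        service = TrackingService(require_secret)
        laptop = Device("SERIAL-0001")  # made-up serial number
        service.enroll("previous-owner", laptop)
        before = service.can_track("previous-owner", laptop)
        laptop.wipe()
        after = service.can_track("previous-owner", laptop)
        results[require_secret] = (before, after)
    return results
```

In this toy model the ID-only design keeps answering the previous owner after the wipe, while the secret-based design stops as soon as the secret is gone. A shared secret has its own problems, as said above, but it at least ties access to something that does not outlive a re-installation.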

Now, I’ve contacted the Apple product-security contact and described the issue. The contact is very responsive, which definitely is a good sign that such issues are taken seriously. However, they came back with the statement that there is no actual security issue: I (as the previous owner) could just remove my old machine from my FMI account to stop tracking it. Well yeah, that may “help” me to not track somebody - but it’s definitely not helping the person who gets tracked. Furthermore, they pointed to a “how-to” document that describes what should be done before selling a Mac (e.g. remove it from all iCloud services). Following this document is of course optional, and the new owner has no way of verifying that the previous owner has removed the machine from these kinds of services.

So what is this? Bad luck? Poor design? Wrong expectations? One could argue that if a machine gets stolen and logged out, the original user still needs to be able to wipe it. That’s true and valid, but it does not require the machine to be bound to the original user’s Apple-ID for its whole lifetime. As soon as somebody wipes the storage or starts over using a different Apple-ID, it should be safe to reset the connection with FMI. After all, FMI is not a theft protection system, it’s a service to find a temporarily lost device. Is Apple doing this kind of hardware-service lock-in to prevent users from reselling their hardware? Well, that could be, but I don’t think they do it on purpose or to push their own “used hardware” service.
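The reset rule I have in mind can be written down in a few lines. This is only a sketch of the policy argued for here, with hypothetical names (`FmiBinding`, `sign_in`, `storage_wiped` and so on); it says nothing about how FMI actually works internally.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FmiBinding:
    """Hypothetical record tying one piece of hardware to at most one account."""

    hardware_id: str
    owner: Optional[str] = None

    def sign_in(self, account: str):
        # Starting over with a different account replaces the old binding.
        self.owner = account

    def sign_out(self):
        # Logging out alone keeps the binding, so a lost or stolen machine
        # that merely got logged out can still be located and wiped.
        pass

    def storage_wiped(self):
        # A full wipe ends the relationship with the previous account.
        self.owner = None

    def can_manage(self, account: str) -> bool:
        return self.owner is not None and self.owner == account
```

Under this rule the seller keeps control while the machine is only logged out, loses it once the storage is wiped, and a buyer who signs in with her own account becomes the only one who can locate or lock the machine. The price is that a thief who wipes the disk escapes tracking too, which is acceptable if FMI is treated as a lost-device service rather than theft protection.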

IMHO it’s a consequence of the attitude of tying customers and all their credentials, data and devices to a closed ecosystem. This is just one more example where “simplicity over security” backfires significantly. It also illustrates that open source software for infrastructure is not the only key to a more trustworthy and secure environment. Web services are already much more relevant to end-users than operating systems or infrastructure services are. Many of these underlying services have already become invisible. Trusting both web and infrastructure services with sensitive information like unrestricted access to physical location data is a huge problem if the service is running as a complete black box without the user in control of his or her own data.

There is a pressing need to discuss, spread and implement the definition of “who owns what” within the web services world. Right now, most people are extremely naive when blindly accepting these 70-page-ish TOS, since there is no real socially acceptable alternative to many of these services. Users must become sensitive to this and be in a position to claim ownership of their data. Giving it away to industry giants for free will cement the current situation and ultimately lead the way to a world with few players dominating not just the market but also their users’ habits and (digital) life.